The Hidden Security Risk in AI Integrations

Critical security vulnerabilities in MCP protocol and AI integrations. Learn how trusted connections become attack vectors in LLM applications and how to protect them.

The Hidden Security Risk in AI Integrations: Lessons from the MCP Protocol

“It worked like magic — until it didn’t. All because of one connection I trusted too easily.”

As artificial intelligence becomes more integrated into our daily workflows, we’re witnessing the emergence of powerful new protocols that connect AI models to the real world.

The Model Context Protocol (MCP), introduced by Anthropic in November 2024, represents one of the most significant advances in this space. But with great power comes great responsibility — and as recent security discoveries show, significant risk.

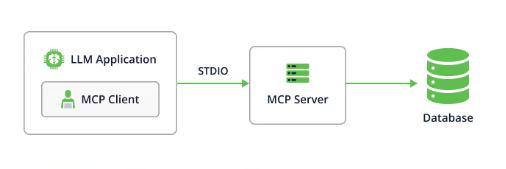

Understanding MCP: The USB-C for AI Applications

Think of the Model Context Protocol as a universal adapter for artificial intelligence. Just as USB-C standardized how we connect devices to peripherals,

MCP standardizes how AI models connect to external tools, databases, and services. MCP provides a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol.

When you ask Claude Desktop to “Send this document to Jane and create a Jira ticket,” MCP translates that natural language command into structured calls that actually interact with your email client and project management system.

This happens through specialized MCP servers — lightweight programs that act as bridges between your AI assistant and specific services or data sources.

The protocol has gained remarkable traction since its release. By early 2025, MCP had become widely adopted, with popular developer tools like Cursor, Replit, Zed, and Sourcegraph supporting it.

Companies like Block and Apollo have integrated MCP into their systems, recognizing the value of standardized AI-data interfaces.

The Bridge That Became a Backdoor

This is where our story takes a darker turn.

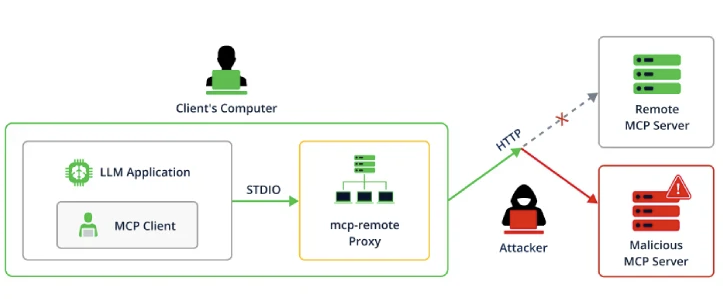

One of the most popular tools in the MCP ecosystem is mcp-remote, a proxy that allows

AI applications to connect to remote MCP servers instead of just local ones.

This tool has been downloaded over 437,000 times, making it a critical piece of infrastructure for many AI-powered workflows.

In July 2025, security researchers at JFrog discovered something alarming: CVE-2025-6514 – a critical (CVSS 9.6) security vulnerability in the mcp-remote project that allows attackers to trigger arbitrary OS command execution on the machine running mcp-remote when it initiates a connection to an untrusted MCP server.

How the Attack Works

The vulnerability exploits a seemingly innocent part of the connection process.

When mcp-remote connects to a new server, it performs an OAuth handshake to

establish authentication. During this process, the server can specify an “authorization endpoint” URL.

The vulnerability allowed malicious servers to craft special URLs that,

when processed by mcp-remote, would execute arbitrary commands on the user’s machine.

Picture this scenario: You discover an exciting new Claude Desktop extension

that promises to revolutionize your workflow.

You install it without checking where its MCP server actually points.

The catch is that server is in fact controlled by an attacker.

The moment Claude Desktop tries to connect through mcp-remote, the malicious

server responds with a weaponized authorization URL.

Within seconds, the attacker has gained full control of your system — no clicking, no downloading, no user interaction required.

The Broader Implications for AI Security

This incident reveals something profound about the new security landscape we’re entering. We’re moving beyond the era where AI simply answers questions or generates text. Modern AI systems are becoming active participants in our digital workflows, with the ability to read files, execute commands, and interact with critical systems.

The security model that worked for traditional software doesn’t fully account for the unique risks introduced by AI integration.

When an AI assistant can autonomously make API calls, run database queries, or even execute system commands based on natural language instructions, the attack surface expands dramatically.

Consider the implications: If a malicious actor can compromise an MCP server that your AI regularly connects to, they’re not just gaining access to data — they’re potentially gaining the ability to manipulate every system your AI can reach. This could include your email, your cloud infrastructure, your databases, or even your development environments.

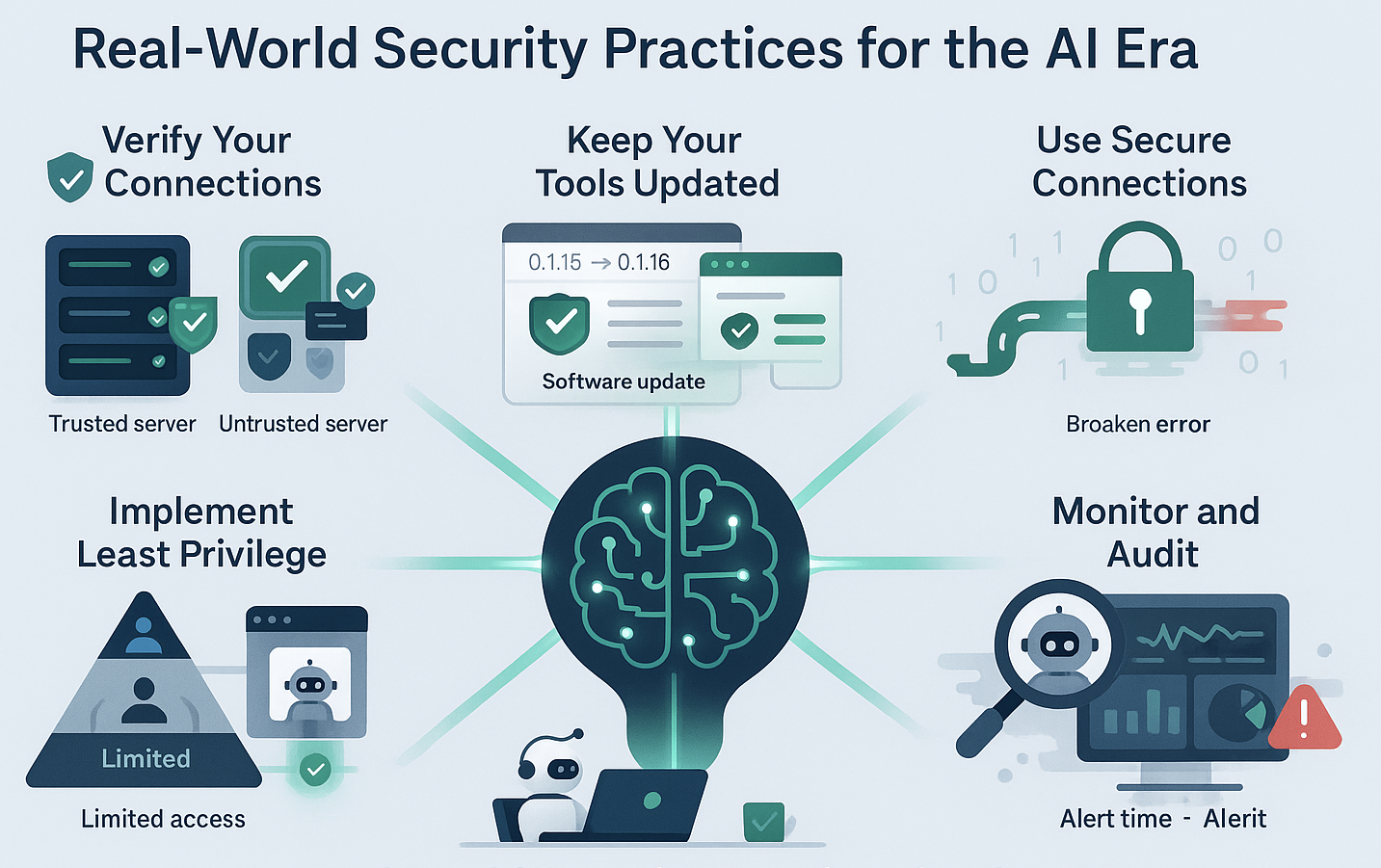

Real-World Security Practices for the AI Era

The good news is that awareness of these risks is growing, and practical solutions are emerging. Here’s how teams can protect themselves while still leveraging the power of AI integration:

Verify Your Connections

Just as you wouldn’t connect to an untrusted Wi-Fi network with sensitive data, you shouldn’t connect your AI to untrusted MCP servers. Always verify the source and reputation of any MCP server before adding it to your configuration.

When possible, stick to servers from established organizations or open-source projects with active maintenance and security reviews.

Keep Your Tools Updated

The CVE-2025-6514 vulnerability was fixed in mcp-remote version 0.1.16. This incident underscores the importance of maintaining up-to-date dependencies in your AI stack. Set up automated monitoring for security updates to any MCP-related tools in your environment.

Use Secure Connections

Always configure your MCP connections to use HTTPS rather than HTTP. This prevents man-in-the-middle attacks where local network attackers could redirect your AI’s connections to malicious servers.

Many of the recent MCP vulnerabilities could have been mitigated with proper encryption and certificate validation.

Implement the Principle of Least Privilege

Consider what level of access your AI actually needs. Does it need to run arbitrary system commands, or can it accomplish its tasks with more limited permissions? Sandbox experimental AI integrations, run them in containers, or use dedicated user accounts with restricted privileges.

Monitor and Audit AI Activities

Traditional security monitoring needs to evolve to account for AI-driven activities. Log the connections your AI makes, the commands it executes, and the data it accesses. Unusual patterns in AI behavior can be early indicators of a compromised integration.

The figure bellow summarizes these practices into a nice infographic.

Learning from Other MCP Vulnerabilities

The mcp-remote vulnerability isn’t an isolated incident.

The MCP ecosystem has seen several other security issues that provide additional lessons:

CVE-2025-49596 in the MCP Inspector tool carries a CVSS score of 9.4 and allows unauthenticated remote code execution. This vulnerability demonstrated how developer tools, even those meant for testing and debugging, can become attack vectors when improperly secured.

Additionally, researchers have discovered vulnerabilities in Anthropic’s Filesystem MCP Server that could allow attackers to break out of sandboxes and manipulate files outside the intended directory scope.

These discoveries highlight the importance of thorough security testing for any MCP implementation.

The Path Forward

The security challenges revealed by these vulnerabilities shouldn’t discourage us from embracing AI integration — they should inform how we do it safely.

The power of protocols like MCP to create truly useful, context-aware AI systems is undeniable. The key is building security considerations into the foundation of these systems rather than treating them as an afterthought.

Organizations implementing MCP should establish clear governance around AI integrations. This includes maintaining inventories of connected servers, implementing regular security reviews, and establishing incident response procedures for AI-related security events.

For developers building MCP servers, the lesson is clear: security must be a primary consideration from the start.

This means implementing proper authentication, input validation, secure communication protocols, and thorough testing for injection vulnerabilities.

Final Thoughts: Balancing Innovation and Security

The emergence of protocols like MCP represents a fundamental shift in how we think about AI capabilities. We’re moving from isolated language models to integrated AI systems that can take meaningful action in the real world. This transformation opens up incredible possibilities for productivity and automation.

But as the recent vulnerabilities demonstrate, this power comes with corresponding risks. The same mechanisms that allow an AI to helpfully manage your calendar or analyze your data can become attack vectors if not properly secured.

The solution isn’t to retreat from AI integration but to approach it with the same security mindset we apply to other critical infrastructure.

This means assuming that attacks will happen, designing systems to contain their impact, and maintaining constant vigilance for new threats.

As we continue to build the infrastructure for AI-human collaboration, security must be woven into the fabric of these systems.

The alternative — powerful AI tools that can be turned against their users — is simply too dangerous to accept.

The future of AI is bright, but only if we’re smart about the bridges we build to get there.

Immediate Action Items:

If you’re using MCP-based tools today, take these steps to secure your environment:

- Update

mcp-remoteto version 0.1.16 or later immediately - Audit your MCP server configurations and remove any insecure HTTP connections

- Implement monitoring for AI-driven activities in your environment

- Establish a process for vetting new MCP servers before deployment

- Subscribe to security advisories for any MCP tools in your stack

The era of AI integration is here. Let’s make sure we get the security right from the beginning.